Over the last year, the workflows vs. agents debate has turned from a niche engineering question into something every product team seems to argue about. New tooling lowers the barrier to spinning up an AI agent that can call tools in a loop, so leaders start asking whether they should rebuild processes around these agents or stick with the safer structure of step-by-step workflows. The conversation heats up because both sides have real wins and real failure modes: agents feel magical when they solve fuzzy, open-ended tasks, but they can be slow, costly, and unpredictable at scale; workflows are efficient and auditable, but can feel rigid when the job needs exploration. That tension between flexibility and control is why this topic is hot and why teams keep getting stuck.

My take is that this isn’t an either–or question. The best systems use both. You can think of agents as steps inside a larger workflow. The workflow gives you structure - the order of tasks, the checkpoints, and the hand-offs - while each step can be as simple or as intelligent as needed. Some steps just run fixed rules; others can reason, use tools, or loop until they find an answer. This way, you adjust how much “thinking power” each part gets. The overall structure stays solid, but you add intelligence only where it truly adds value.
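
To make "agents as steps inside a larger workflow" concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: `normalize_ticket`, `make_agent_step`, `run_workflow`, and `fake_decide` are hypothetical names, and `fake_decide` stands in for a real model or tool call. The point is only that a fixed-rule step and a looping step can share the same interface.

```python
from typing import Callable

# A step is any function that takes the shared state and returns a new state.
Step = Callable[[dict], dict]


def normalize_ticket(state: dict) -> dict:
    """Deterministic step: fixed rules, no model call."""
    return {**state, "text": state["text"].strip().lower()}


def make_agent_step(decide: Callable[[dict], dict], max_iterations: int = 3) -> Step:
    """Wrap a bounded reasoning loop so it looks like any other step.

    `decide` is a hypothetical stand-in for a model/tool call. It returns
    the updated state and sets state["done"] once it has an answer.
    """
    def step(state: dict) -> dict:
        for _ in range(max_iterations):
            state = decide(state)
            if state.get("done"):
                break
        return state
    return step


def run_workflow(steps: list[Step], state: dict) -> dict:
    """The workflow owns the order of steps; each step owns its own logic."""
    for step in steps:
        state = step(state)
    return state


def fake_decide(state: dict) -> dict:
    """Toy 'agent' decision used only to keep the example runnable."""
    attempts = state.get("attempts", 0) + 1
    category = "billing" if "refund" in state["text"] else None
    return {**state, "attempts": attempts, "category": category,
            "done": category is not None}


result = run_workflow(
    [normalize_ticket, make_agent_step(fake_decide)],
    {"text": "  Please REFUND my order  "},
)
print(result["category"], result["attempts"])
```

The workflow does not know or care which steps loop. Swapping a fixed rule for an agent, or the reverse, is a local change to one entry in the list.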

Flexibility, reliability, cost, and latency aren’t global trade-offs; they’re local ones. Each step can be constrained or exploratory depending on what’s needed. By defining this balance upfront, you avoid turning the whole process into an expensive reasoning loop, while keeping it adaptable where it matters. Workflows handle the predictable paths; agents handle the uncertainty.

The same principle makes workflows faster, cheaper, and easier to debug. When you know the path, you don’t need the AI to re-think it each time. You can reuse context, skip redundant reasoning, and inspect errors node by node instead of digging through opaque traces. It’s a cleaner separation of logic and learning, and it scales better in production.

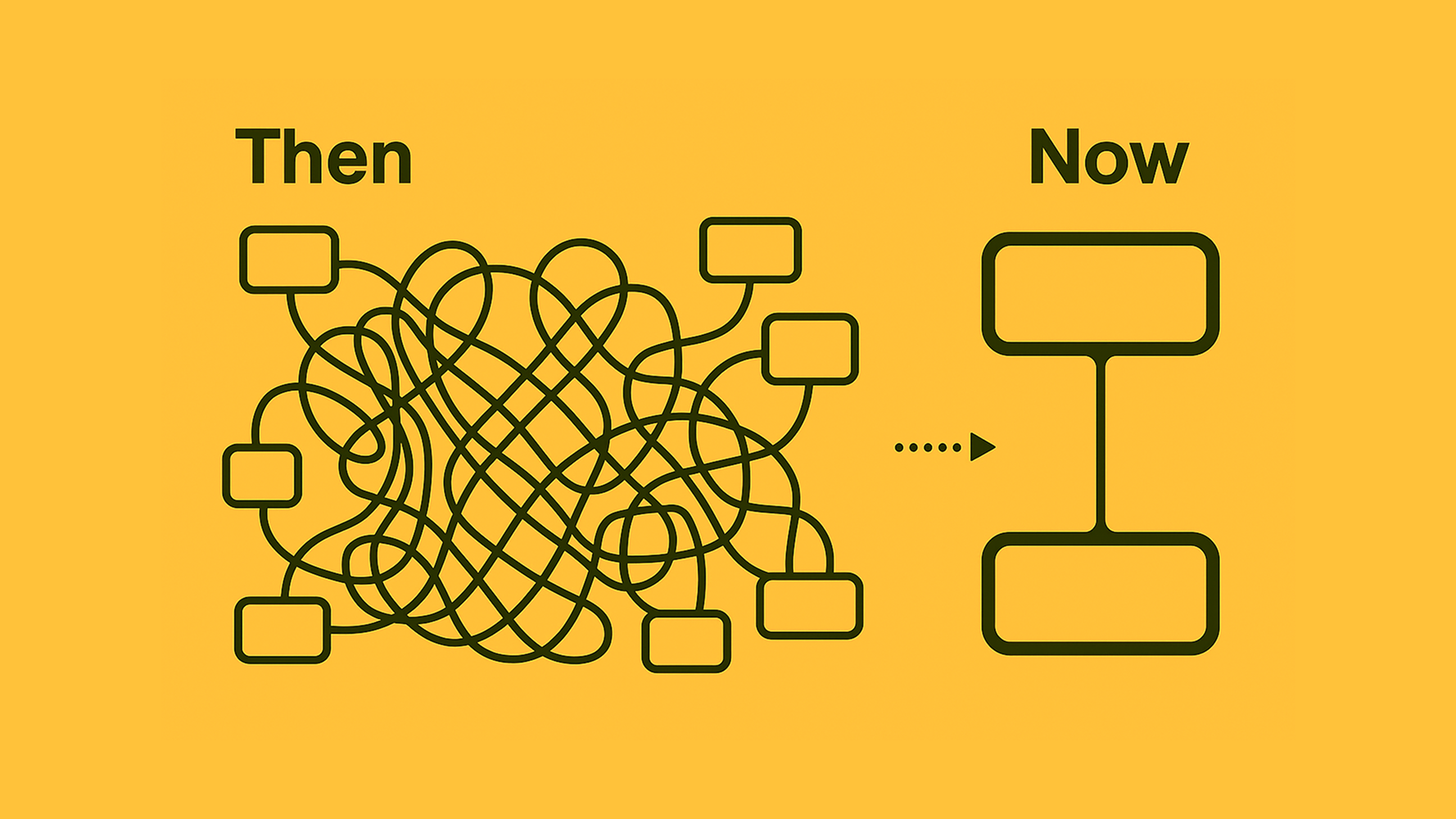

There’s a fair concern that workflows become spaghetti. That’s true if you model every micro-step as its own box and arrow. The good news is we no longer have to do that. Each node can carry an appropriate level of abstraction: what used to be twenty brittle boxes can become one Research & Synthesis node with a clear contract, a few guardrails, and a test suite. Natural-language configuration helps, but the important part is owning the interface (inputs, outputs, guarantees) so domain experts and engineers can reason about the system without spelunking through prompts.
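
One way to picture "owning the interface" is to write the contract down as types plus explicit checks. The sketch below is an assumption, not a prescribed design: `ResearchRequest`, `ResearchResult`, `check_guarantees`, and `research_and_synthesis` are hypothetical names, and the three guarantees are examples chosen for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ResearchRequest:
    """Input side of the contract."""
    question: str
    max_sources: int = 5


@dataclass(frozen=True)
class ResearchResult:
    """Output side of the contract."""
    summary: str
    sources: tuple[str, ...]


def check_guarantees(request: ResearchRequest, result: ResearchResult) -> list[str]:
    """Return the violated guarantees (an empty list means the contract holds)."""
    violations = []
    if not result.summary.strip():
        violations.append("summary must be non-empty")
    if not result.sources:
        violations.append("at least one source is required")
    if len(result.sources) > request.max_sources:
        violations.append("too many sources")
    return violations


def research_and_synthesis(
    request: ResearchRequest,
    impl: Callable[[ResearchRequest], ResearchResult],
) -> ResearchResult:
    """Run any implementation (prompt, agent, or rules) behind the same contract."""
    result = impl(request)
    violations = check_guarantees(request, result)
    if violations:
        raise ValueError("; ".join(violations))
    return result


def stub_impl(request: ResearchRequest) -> ResearchResult:
    """Hypothetical stand-in for the agent that does the actual research."""
    return ResearchResult(summary=f"Findings on: {request.question}",
                          sources=("internal-wiki",))


ok = research_and_synthesis(ResearchRequest("churn drivers"), stub_impl)
print(ok.summary)
```

The prompts and tools behind `impl` can change freely. The callers and the test suite depend only on the request type, the result type, and the guarantees.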

Put together, the pattern looks like this: start with the workflow that matches the business process; decide where determinism is required and where exploration is acceptable; give the exploratory steps agent capabilities and budgets; surround them with simple checks, caching, and fallbacks; measure each node so you can improve the weak links without rewriting the whole thing. You end up with a decision engine that is understandable, auditable, and still smart where it needs to be.
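
The pattern above can be sketched as a small wrapper around an exploratory step. This is a toy under stated assumptions: `run_node`, `metrics`, and `flaky_explore` are hypothetical names, the budget is a plain attempt count, the cache is an in-memory dict, and the check is whatever predicate you pass in.

```python
import time
from collections import defaultdict

# Per-node counters, so weak links can be found without reading full traces.
metrics = defaultdict(
    lambda: {"calls": 0, "cache_hits": 0, "fallbacks": 0, "seconds": 0.0}
)
_cache: dict = {}


def run_node(name, key, explore, check, fallback, max_attempts=3):
    """Run an exploratory step with a budget, a check, a cache, and a fallback."""
    stats = metrics[name]
    stats["calls"] += 1
    if (name, key) in _cache:
        stats["cache_hits"] += 1          # known path: skip the reasoning
        return _cache[(name, key)]
    start = time.perf_counter()
    try:
        for attempt in range(max_attempts):   # budget: bounded attempts
            candidate = explore(key, attempt)
            if check(candidate):              # simple check on the output
                _cache[(name, key)] = candidate
                return candidate
        stats["fallbacks"] += 1               # budget spent: deterministic fallback
        return fallback(key)
    finally:
        stats["seconds"] += time.perf_counter() - start


def flaky_explore(key, attempt):
    """Hypothetical agent call that only succeeds from the second attempt on."""
    return f"answer for {key}" if attempt >= 1 else ""


answer = run_node(
    "research", "q1", flaky_explore,
    check=bool, fallback=lambda k: "escalate to a human",
)
print(answer, dict(metrics["research"]))
```

In a real system the budget might be tokens, cost, or wall-clock time rather than attempts, and the fallback might be a simpler rule or a human hand-off. The shape stays the same: the agent explores inside limits that the workflow sets and measures.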

If you’re stuck in the agents or workflows argument, reframe the question. You don’t have to choose a team. Build the rails with workflows, then drop in agents where judgment and discovery matter. That’s how you get systems that scale in production without giving up the creativity that makes AI useful in the first place.